There’s no such thing as a complete vacuum. Even in the cosmic void between galaxies, there’s an estimated density of about one hydrogen or helium atom per cubic meter. But these estimates are largely theoretical—no one has yet launched a sensor into intergalactic space and beamed back the result. On top of that, we have no means of measuring vacuums that low.

At least, not yet.

Researchers are now developing a new vacuum-measurement tool that may be able to detect lower densities than any existing technique can. This new quantum sensor uses individual atoms, cooled to just shy of absolute zero, as targets for stray particles to hit. These atom-based vacuum gauges could detect lower atomic concentrations than ever before, and because they don’t require calibration, they are good candidates to serve as a standard.

“The atom was already our standard for time and frequency,” says Kirk Madison, professor of physics at the University of British Columbia (UBC), in Vancouver, and one of the pioneers of cold-atom-based vacuum-measurement technology. “Wouldn’t it be cool if we could make an atom the standard for vacuum measurement as well?”

This quantum-sensor technology promises two advances at once. It could extend vacuum measurement to more rarefied conditions than existing gauges can reach, and, because it needs no calibration, it could serve as a primary standard: the reference against which all other vacuum gauges are compared.

Vacuum measurement on Earth

While humans haven’t yet succeeded in making vacuum as pure as it is in deep space, many earthly applications still require some level of emptiness. Semiconductor manufacturing, large experiments in particle physics and gravitational-wave detection, some quantum-computing platforms, and surface-analysis tools, including X-ray photoelectron spectroscopy, all require so-called ultrahigh vacuum.

At these low levels of particles per unit volume, vacuum is parameterized by pressure, measured in pascals. Regular atmospheric pressure is 10⁵ Pa. Ultrahigh vacuum is considered to be anything less than about 10⁻⁷ Pa. Some applications require as low as 10⁻⁹ Pa. The deepest depths of space still hold the nothingness record, reaching below 10⁻²⁰ Pa.
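
Because these pressures are so small, it can help to translate them back into particle counts. The sketch below applies the ideal-gas law, n = p/(k_B·T), assuming the residual gas sits at room temperature (295 K is our assumption); the function name `number_density` is ours, not from any library.

```python
# Minimal sketch: ideal-gas conversion from pressure to number density.
# Assumption: the residual gas is at room temperature (295 K).
K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def number_density(pressure_pa: float, temperature_k: float = 295.0) -> float:
    """Return gas particles per cubic meter, n = p / (k_B * T)."""
    if pressure_pa < 0 or temperature_k <= 0:
        raise ValueError("pressure must be non-negative and temperature positive")
    return pressure_pa / (K_B * temperature_k)


if __name__ == "__main__":
    # Pressures quoted in the text, in pascals.
    for label, pressure in [
        ("atmosphere", 1e5),
        ("ultrahigh-vacuum threshold", 1e-7),
        ("demanding applications", 1e-9),
    ]:
        print(f"{label:27s} {pressure:7.0e} Pa -> {number_density(pressure):.1e} per m^3")
```

Under that assumption, even 10⁻⁹ Pa still leaves a few times 10¹¹ particles in every cubic meter, which puts the intergalactic estimate of about one atom per cubic meter in perspective.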

The method of choice for measuring pressure in the ultrahigh vacuum regime is the ionization gauge. “They work by a fairly straightforward mechanism that dates back to vacuum tubes,” says Stephen Eckel, a member of the cold-atom vacuum-measurement team at the National Institute of Standards and Technology (NIST).

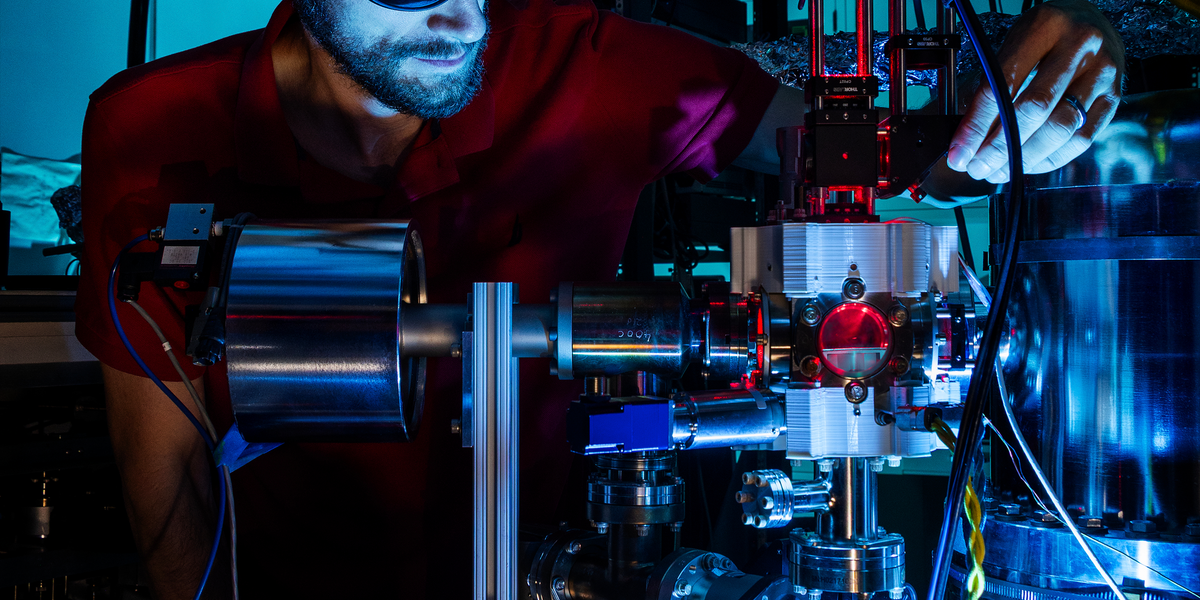

A portable cold-atom vacuum-measurement tool [top] detects the fluorescence of roughly 1 million lithium atoms [bottom], and infers the vacuum pressure based on how quickly the fluorescence decays. Photos: Jayme Thornton

Indeed, an ionization gauge has the same basic components as a vacuum tube. The gauge contains a heated filament that emits electrons into the chamber. The electrons are accelerated toward a positively charged grid. En route to the grid, the electrons occasionally collide with atoms and molecules flying around in the vacuum, knocking off their electrons and creating positively charged ions. These ions are then collected by a negatively charged electrode. The current generated by these positive ions is proportional to the number of atoms floating about in the vacuum, giving a pressure reading.
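
The proportionality described above is often written as I_ion = S × I_e × p, where I_e is the electron emission current and S is the gauge’s sensitivity for the gas present. The minimal sketch below simply inverts that relation; the 4 mA emission current and the sensitivity of 0.075 per pascal are illustrative assumptions, not specifications of any particular gauge.

```python
# Minimal sketch of the ion-gauge relation I_ion = S * I_e * p, solved for p.
# Assumption: S is the (calibrated) sensitivity for the gas present, in 1/Pa.


def ion_gauge_pressure(ion_current_a: float, emission_current_a: float,
                       sensitivity_per_pa: float) -> float:
    """Return the pressure in pascals implied by the collected ion current."""
    if emission_current_a <= 0 or sensitivity_per_pa <= 0:
        raise ValueError("emission current and sensitivity must be positive")
    return ion_current_a / (sensitivity_per_pa * emission_current_a)


if __name__ == "__main__":
    # Illustrative values: 4 mA emission, S = 0.075 /Pa, 30 pA of ion current.
    print(f"{ion_gauge_pressure(3e-11, 4e-3, 0.075):.1e} Pa")  # 1.0e-07 Pa
```

With these assumed numbers, a pressure of 10⁻⁷ Pa produces an ion current of only about 30 picoamperes, which hints at why small changes in the gauge’s geometry and electronics matter.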

Ion gauges are relatively cheap (under US $1,000) and commonplace. However, they come with a few difficulties. First, although the current in the ion gauge is proportional to the pressure in the chamber, that proportionality constant depends on a lot of fine details, such as the precise geometry of the filament and the grid. The constant cannot easily be calculated from the electrical and physical characteristics of the setup, so ion gauges require thorough calibration. “A full calibration run on the ion gauges is like a full month of somebody’s time,” says Daniel Barker, a physicist at NIST who’s also working on the cold-atom vacuum-measurement project.
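
In essence, a calibration run records the ion current while a trusted reference standard sets known pressures, then estimates the sensitivity S. A minimal sketch, assuming a fixed emission current and a gauge that is linear over the range, fits I_ion = S × I_e × p as a line through the origin by least squares. The calibration points in the example are synthetic and hypothetical.

```python
# Minimal sketch: estimate an ion gauge's sensitivity S from a calibration run.
# Assumptions: fixed emission current, a gauge that is linear over the range,
# and reference pressures supplied by a trusted standard.
from typing import Sequence


def fit_sensitivity(reference_pressures_pa: Sequence[float],
                    ion_currents_a: Sequence[float],
                    emission_current_a: float) -> float:
    """Least-squares S for I_ion = S * I_e * p, with the line forced through zero."""
    if len(reference_pressures_pa) != len(ion_currents_a) or not ion_currents_a:
        raise ValueError("need matching, non-empty pressure and current lists")
    if emission_current_a <= 0:
        raise ValueError("emission current must be positive")
    # Minimizing sum((I - S*I_e*p)^2) over S gives S = sum(I*p) / (I_e * sum(p^2)).
    numerator = sum(p * i for p, i in zip(reference_pressures_pa, ion_currents_a))
    denominator = emission_current_a * sum(p * p for p in reference_pressures_pa)
    if denominator == 0:
        raise ValueError("reference pressures must not all be zero")
    return numerator / denominator


if __name__ == "__main__":
    # Hypothetical calibration points (reference pressure in Pa, ion current in A).
    pressures = [1e-5, 1e-6, 1e-7]
    currents = [3.02e-9, 2.97e-10, 3.01e-11]
    print(f"S = {fit_sensitivity(pressures, currents, 4e-3):.4f} per Pa")
```

Because this unweighted fit is dominated by the highest-pressure points, it says little about behavior near the bottom of the range; a real calibration might weight the points or fit relative errors instead, a choice this sketch leaves open.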

Second, the calibration services provided by NIST (among others) calibrate down to only 10⁻⁷ Pa. Performance below that pressure is questionable, even for a well-calibrated gauge. What’s more, at lower pressures, the heat from the ion gauge becomes a problem: Hotter surfaces emit atoms in a process called outgassing, which pollutes the vacuum. “If you’re shooting for a vacuum chamber with really low pressures,” Madison says, “these ionization gauges actually work against you, and many people turn them off.”

Third, the reading on the ion gauge depends very strongly on the types of atoms or molecules present in the vacuum. Different types of atoms could produce readings that vary by up to a factor of four. This variance is fine if you know exactly what’s inside your vacuum chamber, or if you don’t need that precise a measurement. But for certain applications, especially in research settings, these concerns are significant.
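
Ion gauges are usually calibrated for nitrogen, so their display reads a nitrogen-equivalent pressure; correcting for another gas means dividing by that gas’s sensitivity relative to nitrogen. The sketch below shows only the arithmetic. The relative-sensitivity values are approximate and illustrative, and a real correction should use the gauge manufacturer’s own table.

```python
# Minimal sketch: convert a nitrogen-equivalent ion-gauge reading to the true
# pressure of a single, known gas. The relative sensitivities are approximate,
# illustrative values; use the gauge manufacturer's table in practice.
RELATIVE_SENSITIVITY = {  # sensitivity for the gas divided by that for N2
    "N2": 1.00,
    "H2": 0.46,
    "Ar": 1.29,
}


def true_pressure(indicated_pa: float, gas: str) -> float:
    """Divide the N2-equivalent reading by the gas's relative sensitivity."""
    try:
        factor = RELATIVE_SENSITIVITY[gas]
    except KeyError:
        raise ValueError(f"no relative sensitivity listed for {gas!r}") from None
    return indicated_pa / factor


if __name__ == "__main__":
    # The same displayed reading implies different pressures for different gases.
    for gas in RELATIVE_SENSITIVITY:
        print(f"{gas:3s} {true_pressure(1e-7, gas):.1e} Pa")
```

The correction only works if you already know which gas dominates the chamber, which is exactly the situation the paragraph above describes as the comfortable case.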

How a cold-atom vacuum standard works

The idea of a cold-atom vacuum-measurement tool developed as a surprising side effect of the study of cold atoms. Scientists first started cooling atoms down in an effort to make better atomic clocks back in the 1970s. Since then, cooling atoms and trapping them has become a cottage industry, giving rise to optical atomic clocks, atomic navigation systems, and neutral-atom quantum computers.

These experiments have to be done in a vacuum, to prevent the surrounding environment from heating the atoms. For decades, the vacuum was thought of as merely a finicky factor to be implemented as well as possible. “Vacuum limitations on atom traps have been known since the dawn of atom traps,” Eckel says. Atoms flying around the vacuum chamber would collide with the cooled atoms and knock them out of their trap, leading to loss. The better the vacuum, the slower that process would go.

The most common vacuum-measurement tool in the high-vacuum range today is the ion gauge, basically a vacuum tube in reverse: A hot filament emits electrons that fly toward a positively charged grid, ionizing background atoms and molecules along the way. Jayme Thornton

UBC’s Kirk Madison and his collaborator James Booth (then at the British Columbia Institute of Technology, in Burnaby) were among the first to turn that thinking on its head back in the 2000s. Instead of battling the vacuum to preserve the trapped atoms, they thought, why not use the trapped atoms as a sensor to measure how empty the vacuum is?

To understand how they did that, consider a typical cold-atom vacuum-measurement device. Its main component is a vacuum chamber filled with a vapor of a particular atomic species. Some experiments use rubidium, while others use lithium. Let’s call it lithium between friends.

A tiny amount of lithium gas is introduced into the vacuum, and some of it is captured in a magneto-optical trap. The trap consists of a magnetic field with zero intensity at the center of the trap, increasing gradually away from the center. Six laser beams point toward the center from above, below, the left, the right, the front, and the back. The magnetic and laser forces are arranged so that any lithium atom that might otherwise fly away from the center is most likely to absorb a photon from the lasers, getting a momentum kick back into the trap.
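
The restoring action can be illustrated with the standard one-dimensional textbook model of a single pair of counterpropagating beams. In that model, the Zeeman shift from the field gradient and the Doppler shift both bring the beam that pushes the atom back toward the center closer to resonance. This is a simplified sketch, not the apparatus described here: it treats one axis only, ignores saturation cross-talk between beams, and the lithium linewidth, detuning, intensity, and field-gradient values are assumptions chosen for illustration.

```python
# Simplified 1D magneto-optical-trap force: two counterpropagating beams,
# no interference or cross-saturation between beams. The lithium constants and
# all trap parameters below are assumptions chosen for illustration.
import math

HBAR = 1.054571817e-34              # reduced Planck constant, J*s
WAVELENGTH = 671e-9                 # lithium D2 line, m (assumed)
GAMMA = 2 * math.pi * 5.87e6        # natural linewidth, rad/s (assumed)
K = 2 * math.pi / WAVELENGTH        # optical wave number, 1/m


def scattering_rate(detuning: float, s: float) -> float:
    """Two-level photon scattering rate (1/s) for one beam of saturation s."""
    return 0.5 * GAMMA * s / (1 + s + 4 * (detuning / GAMMA) ** 2)


def mot_force_1d(z: float, v: float, detuning: float = -GAMMA, s: float = 1.0,
                 zeeman_gradient: float = 1.76e10) -> float:
    """Net force (N) on an atom at position z (m) moving at velocity v (m/s).

    zeeman_gradient is the Zeeman shift per meter of displacement, rad/(s*m)
    (hypothetical; roughly 0.2 T/m acting on a one-Bohr-magneton moment).
    The beam pushing toward +z is shifted by -(k*v + beta*z) and the beam
    pushing toward -z by +(k*v + beta*z), so for red detuning the beam that
    points back toward the center, against the motion, is nearer resonance.
    """
    shift = K * v + zeeman_gradient * z
    push_plus = scattering_rate(detuning - shift, s)
    push_minus = scattering_rate(detuning + shift, s)
    return HBAR * K * (push_plus - push_minus)


if __name__ == "__main__":
    for z_mm in (-2.0, -1.0, 0.0, 1.0, 2.0):
        force = mot_force_1d(z_mm * 1e-3, 0.0)
        print(f"z = {z_mm:+.1f} mm -> F = {force:+.2e} N")
```

The printed forces change sign across the center, which is the "momentum kick back into the trap" in numerical form; a nonzero velocity at the center likewise produces a force opposing the motion.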

The trap is quite shallow, meaning that hot atoms—above 1 kelvin or so—will not be captured. So the result is a small, confined cloud of really cold atoms, at the center of the trap. Because the atoms absorb laser light occasionally to keep them in the trap, they also reemit light, creating fluorescence. Measuring this fluorescence allows scientists to calculate how many atoms are in the trap.
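
In the simplest picture, turning fluorescence into an atom number is a division: the detected optical power divided by the energy per photon, the per-atom scattering rate, and the fraction of emitted photons the optics collect. A minimal sketch, assuming lithium’s D2 line (671 nm, natural linewidth 2π × 5.87 MHz), a two-level scattering-rate model, and a hypothetical 1 percent collection efficiency; this is not necessarily the calibration used by the teams quoted here.

```python
# Minimal sketch: atom number from detected MOT fluorescence.
# Assumptions (ours): lithium D2 line, a two-level scattering-rate model with
# a single effective saturation parameter, and a hypothetical 1 % collection
# efficiency that lumps together lens solid angle and detector efficiency.
import math

H = 6.62607015e-34                  # Planck constant, J*s (exact SI value)
C = 299_792_458.0                   # speed of light, m/s (exact SI value)
WAVELENGTH = 671e-9                 # lithium D2 line, m (assumed)
GAMMA = 2 * math.pi * 5.87e6        # natural linewidth, rad/s (assumed)


def scattering_rate(detuning: float, s: float) -> float:
    """Photons scattered per second per atom in the two-level approximation."""
    return 0.5 * GAMMA * s / (1 + s + 4 * (detuning / GAMMA) ** 2)


def atom_number(detected_power_w: float, collection_efficiency: float,
                detuning: float = -GAMMA, s: float = 1.0) -> float:
    """N = P_detected / (photon energy * collection efficiency * scattering rate)."""
    if not 0 < collection_efficiency <= 1:
        raise ValueError("collection efficiency must be in (0, 1]")
    photon_energy = H * C / WAVELENGTH
    detected_power_per_atom = (photon_energy * collection_efficiency
                               * scattering_rate(detuning, s))
    return detected_power_w / detected_power_per_atom


if __name__ == "__main__":
    # Hypothetical reading: 9 nW reaching the detector with 1 % collection.
    print(f"N = {atom_number(9e-9, 0.01):.2e} atoms")
```

Under these assumed parameters, a cloud of about a million atoms yields only nanowatts at the detector, so the fluorescence signal is small but readily measurable.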

To use this setup to measure vacuum, you load the atoms into the magneto-optical trap and measure the fluorescence. Then, you turn off the light and hold the atoms in just the magnetic field. During this time, background atoms in the vacuum will chance upon the trapped atoms, knocking them out. After a little while, you turn the light back on and check how much the fluorescence has decreased. This measures how many atoms got knocked out, and therefore how many collisions occurred.
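
If the recaptured fluorescence is proportional to the number of surviving atoms and background collisions are the only loss, the atom number decays exponentially, N(t) = N₀ exp(−Γt). The sketch below estimates the loss rate Γ from fluorescence measured after several hold times by fitting a straight line to ln F; both assumptions and the function name are ours, and a real analysis also has to separate out other loss mechanisms.

```python
# Minimal sketch: estimate the background-collision loss rate from fluorescence
# recorded after several hold times (one load-hold-recapture cycle per point).
# Assumptions (ours): recaptured fluorescence is proportional to atom number,
# and losses are purely exponential, N(t) = N0 * exp(-gamma * t).
import math
from typing import Sequence


def loss_rate(hold_times_s: Sequence[float], fluorescence: Sequence[float]) -> float:
    """Return gamma (1/s) from a least-squares straight-line fit of ln(F) vs t."""
    if len(hold_times_s) != len(fluorescence) or len(hold_times_s) < 2:
        raise ValueError("need at least two matching (time, fluorescence) points")
    if any(f <= 0 for f in fluorescence):
        raise ValueError("fluorescence values must be positive to take logs")
    logs = [math.log(f) for f in fluorescence]
    n = len(hold_times_s)
    t_mean = sum(hold_times_s) / n
    y_mean = sum(logs) / n
    s_tt = sum((t - t_mean) ** 2 for t in hold_times_s)
    if s_tt == 0:
        raise ValueError("hold times must not all be equal")
    s_ty = sum((t - t_mean) * (y - y_mean) for t, y in zip(hold_times_s, logs))
    return -s_ty / s_tt  # the slope of ln(F) versus t is -gamma


if __name__ == "__main__":
    # Synthetic, noise-free data generated with gamma = 0.05 per second.
    times = [0.0, 2.0, 5.0, 10.0]
    signal = [1000.0 * math.exp(-0.05 * t) for t in times]
    print(f"gamma = {loss_rate(times, signal):.3f} per s")
```

Fitting on a log scale turns the exponential decay into a line, so the loss rate is just the negative slope; with only two points it reduces to Γ = ln(F₁/F₂)/(t₂ − t₁).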

The reason you need the trap to be so shallow and the atoms to be so cold is that these collisions are very weak. “A few collisions are quite energetic, but most of the background gas particles fly by and, like, whisper to the trapped atom, and it just gently moves away,” Madison says.

This method has several advantages over the traditional ion-gauge measurement. The atomic method does not need calibration: how quickly the fluorescence dims at a given vacuum pressure can be calculated accurately. These calculations are involved, but in a paper published in 2023, the NIST team demonstrated that the latest method of calculation shows excellent agreement with their experiment. Because this technique does not require calibration, it can serve as a primary standard for vacuum pressure, and could potentially even be used to calibrate ion gauges.
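
The calculated quantity that links a measured loss rate Γ to pressure is a loss-rate coefficient K, the velocity-averaged loss cross section, so that the background density is n = Γ/K and the pressure is p = n·k_B·T. In general K depends on which gas is present and on how deep the trap is. The sketch below uses a placeholder K = 3 × 10⁻¹⁵ m³/s and a gas temperature of 295 K; both are hypothetical inputs, not results from the NIST paper.

```python
# Minimal sketch: background-gas pressure from a measured loss rate.
# Gamma = n * K, where K = <sigma_loss * v> is the calculated loss-rate
# coefficient, and p = n * k_B * T. K and T below are placeholders (ours).
K_B = 1.380649e-23        # Boltzmann constant, J/K (exact SI value)
K_PLACEHOLDER = 3e-15     # hypothetical loss-rate coefficient, m^3/s


def pressure_from_loss_rate(loss_rate_per_s: float,
                            rate_coefficient_m3_per_s: float = K_PLACEHOLDER,
                            temperature_k: float = 295.0) -> float:
    """Return p = (Gamma / K) * k_B * T in pascals."""
    if rate_coefficient_m3_per_s <= 0 or temperature_k <= 0:
        raise ValueError("rate coefficient and temperature must be positive")
    number_density = loss_rate_per_s / rate_coefficient_m3_per_s  # per m^3
    return number_density * K_B * temperature_k


if __name__ == "__main__":
    print(f"{pressure_from_loss_rate(0.01):.1e} Pa")
```

With these placeholder numbers, a loss rate of 0.01 per second corresponds to roughly 1.4 × 10⁻⁸ Pa. Nothing in the conversion requires a reference gauge, which is why the accuracy of the calculated K is what matters.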

The cold-atom measurement is also much less finicky when it comes to the actual contents of the vacuum. Whether the vacuum is contaminated with helium or plutonium, the measured pressure will vary by perhaps only a few percent, while the ion gauge sensitivity and reading for these particles might differ by an order of magnitude, Eckel says.

Cold atoms could also potentially measure much lower vacuum pressures than ion gauges can. The current lowest pressure they’ve reliably measured is around 10⁻⁹ Pa, and NIST scientists are working on figuring out what the lower boundary might be. “We honestly don’t know what the lower limit is, and we’re still exploring that question,” Eckel says.

No vacuum is completely empty. The degree to which vacuum pressure approaches pure nothingness is measured in pascals, with Earth’s atmosphere clocking in at 10⁵ Pa and intergalactic space at a measly 10⁻²⁰ Pa. In between, the new cold-atom vacuum gauges can measure farther along the emptiness scale than the well-established ionization gauges can.

Sources: S. Eckel (cold-atom gauge, ionization gauge); K. Zou (molecular-beam epitaxy, chemical vapor deposition); L. Monteiro, “1976 Standard Atmosphere Properties” (Earth’s atmosphere); E.J. Öpik, Planetary and Space Science (1962) (Mars, moon atmosphere); A. Chambers, “Modern Vacuum Physics” (2004) (interplanetary and intergalactic space)

Of course, the cold-atom approach also has drawbacks. It struggles at higher pressures, above 10⁻⁷ Pa, so its applications are confined to the ultrahigh vacuum range. And although no commercial atomic vacuum sensors are available yet, they are likely to be much more expensive than ion gauges, at least to start.

That said, there are many applications where these devices could unlock new possibilities. At large science experiments, including LIGO (the Laser Interferometer Gravitational-Wave Observatory) and ones at CERN (the European Organization for Nuclear Research), well-placed cold-atom vacuum sensors could measure the vacuum pressure and also help determine where a potential leak might be coming from.

In semiconductor development, a particularly promising application is molecular-beam epitaxy (MBE). MBE is used to produce the thin, highly pure semiconductor layers used in laser diodes and devices for high-frequency electronics and quantum technologies. The technique operates in ultrahigh vacuum, with pure elements held in separate containers and heated at one end of the vacuum chamber. The elements travel across the vacuum until they hit the target surface, where they grow one layer at a time.

Precisely controlling the proportion of the ingredient elements is essential to the success of MBE. Normally, this requires a lot of trial and error: building up thin films, checking whether the proportions are correct, then adjusting as needed. With a cold-atom vacuum sensor, the quantity of each element emitted into the vacuum could be detected on the fly, greatly speeding up the process.

“If this technique could be used in molecular-beam epitaxy or other ultrahigh vacuum environments, I think it will really benefit materials development,” says Ke Zou, an assistant professor of physics at UBC who studies molecular-beam epitaxy. In these high-tech industries, researchers may find that the ability to measure nothing is everything.

This article appears in the October 2025 print issue.
