Using ChatGPT to help you write is like putting on a pair of high heels. The reason, according to a postgraduate researcher from China: “It makes my writing look noble and elegant, although I occasionally fall flat on my face in the academic world.”

This comparison came from a participant in a recent study of students who have adopted generative artificial intelligence in their work. The researchers asked international students who were completing postgraduate studies in the United Kingdom to explain AI’s role in their writing using a metaphor.

The answers were creative and diverse: AI was described as a spacecraft, a mirror, a performance-enhancing drug, a self-driving car, makeup, a bridge or fast food. Two people compared generative AI to Spider-Man, and another to the Marauder’s Map from Harry Potter. These comparisons reveal how adopters of the technology feel about its impact on their work at a time when institutions are struggling to draw lines around which uses are ethical and which are not.

“Generative AI has transformed education dramatically,” says senior study author Chin-Hsi Lin, an educational technology researcher at the University of Hong Kong. “We want students to express their ideas” about how they are using it and how they feel about it, he says.

Lin and his colleagues recruited postgraduate students from 14 regions, including China, Pakistan, France, Nigeria and the United States, who were studying in the United Kingdom and used ChatGPT-4 in their work, which at the time was available only to paying subscribers. Students were asked to come up with and explain a metaphor for the way generative AI affects their academic writing. To verify that the 277 metaphors in the participants’ responses reflected their actual use of the technology, the researchers conducted in-depth interviews with 24 of the students and asked them to provide screenshots of their interactions with AI.

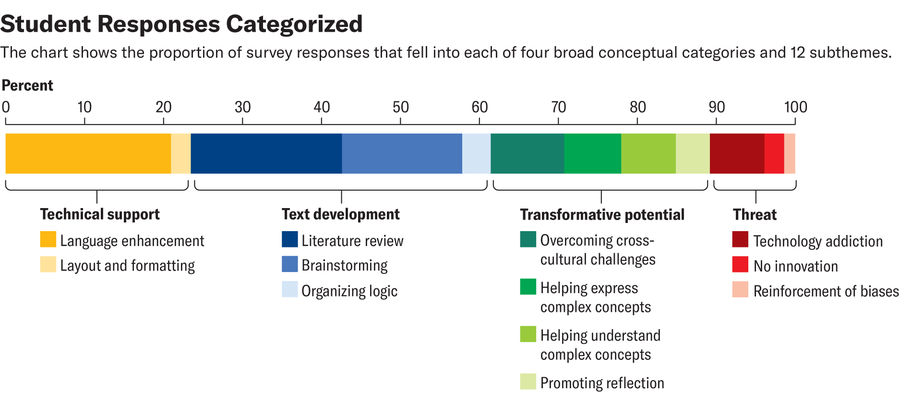

When analyzing the responses, the researchers identified four categories of how students used and thought about AI in their work. The most basic of these was technical support: using AI to check English grammar or format a reference list. Participants compared AI to cosmetic enhancements, such as makeup or high heels; to a human role, such as a tutor or language editor; or to a mechanical tool, such as a packaging machine or a tape measure.

In the next category, text development, generative AI was more involved in the writing process itself. Some students used it to organize the logic of their writing; one person likened it to Tesla’s Autopilot because it helped them stay on course. Others used it to help with their literature review and compared it to an assistant, a common metaphor in AI marketing, or to a personal shopper. And students who used the chatbot to help brainstorm often chose metaphors that described the technology as a guide. They called it a compass, a partner, a bus driver or a magic map.

In the third category, students used AI to transform their writing process and final product more significantly. Here, they called the technology a “bridge” or a “teacher” that could help them cross cultural boundaries in communication styles, which is especially important because academic writing is often done in English. Eight people described it as a mind reader because, to quote one participant, it helped them express “those deeply nuanced concepts that are difficult to articulate.”

Others said it actually helped them understand those difficult concepts, especially when drawing on different disciplines. Three people compared it to a spacecraft and two to Spider-Man “because it can quickly navigate through the complex academic information network” across disciplines.

In the fourth category, students’ metaphors highlighted the potential dangers of AI. Some participants expressed discomfort with the way it enables a lack of innovation (like a painter who simply copies the work of others) or a deeper lack of understanding (like fast food, which is convenient but not nutritious). In this category, students most commonly called it a drug, especially an addictive one. One particularly apt response compared it to steroids in sports: “In a competitive environment, nobody wants to be left behind because they don’t use it.”

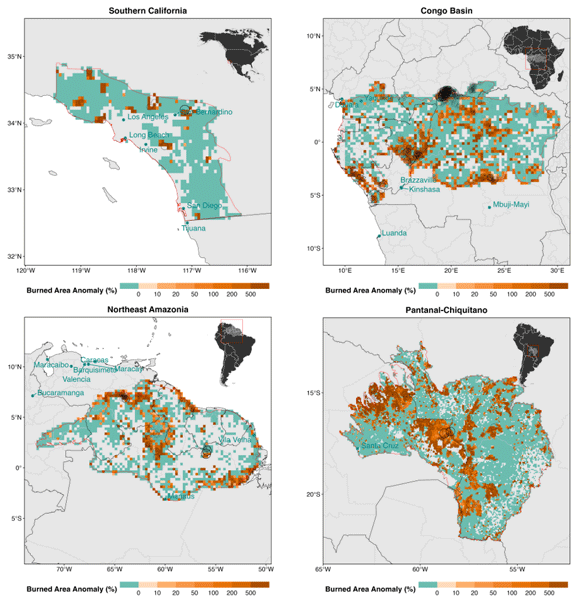

Amanda Montañez; Source: “High Heels, Compasses, Spider-Man or Drug? …” 228; April 2025 (data)

“Metaphors really matter, and have shaped public discourse” for all types of new technologies, says Emily Weinstein, a technology researcher at Harvard University’s Center for Digital Thriving, who was not involved in the new study. The comparisons we use to talk about new technologies can reveal our assumptions about how they work, and even our blind spots.

For example, “there are implicit threats in some of the other metaphors here,” she says. Driver-assistance systems sometimes cause crashes. Mind readers from a fantasy world and magic maps cannot be explained by science; they simply have to be trusted. And high heels, as the participant pointed out, can make you fall.

Weinstein says there is never a single right metaphor for talking about a new technology. For example, drug or cigarette metaphors are very common when people talk about social media, and in some ways they fit. Apps such as TikTok and Instagram can be genuinely addictive and are often targeted at adolescents. But when we try to assign just one metaphor to a new technology, we risk flattening it and ignoring both its benefits and its dangers.

“If your mental model of social media is that it is crack [cocaine], it will be difficult for us to have a conversation about moderating use, for example,” she says.

And culturally, our mental models of generative AI are still seriously lacking. “The problem is that right now we lack ways to talk about the specifics. There is so much moral panic and backlash,” she says. But “I think that many of the things that give us this moral and emotional reaction … have to do with the fact that we do not have the language or ways of speaking more specifically” about what we want from this technology.

Creating this new language will require more listening and discussion in the classroom, perhaps even assignment by assignment. This could relieve pressure on teachers to understand every potential use of AI and ensure that students are not left in a gray area without guidance. For certain tasks, teachers and advisers may want to allow students to use generative AI as a compass for brainstorming or as the Spider-Man to their Gwen Stacy, helping them navigate the web.

“There are different learning objectives for different tasks and different contexts,” says Weinstein. “And sometimes your goal might not be in tension with a more transformative use.”

#Chatgpt #drug #Metaphors #show #students