My colleagues and I at Purdue University have discovered a significant imbalance in the human values embedded in AI systems. The systems were predominantly oriented toward information and utility values and less toward prosocial, well-being and civic values.

At the heart of many AI systems are vast collections of images, text and other forms of data used to train models. While these datasets are meticulously curated, it is not uncommon for them to contain unethical or prohibited content.

To ensure that AI systems do not use harmful content when responding to users, researchers introduced a method called reinforcement learning from human feedback. Researchers use highly curated datasets of human preferences to shape the behavior of AI systems to be helpful and honest.

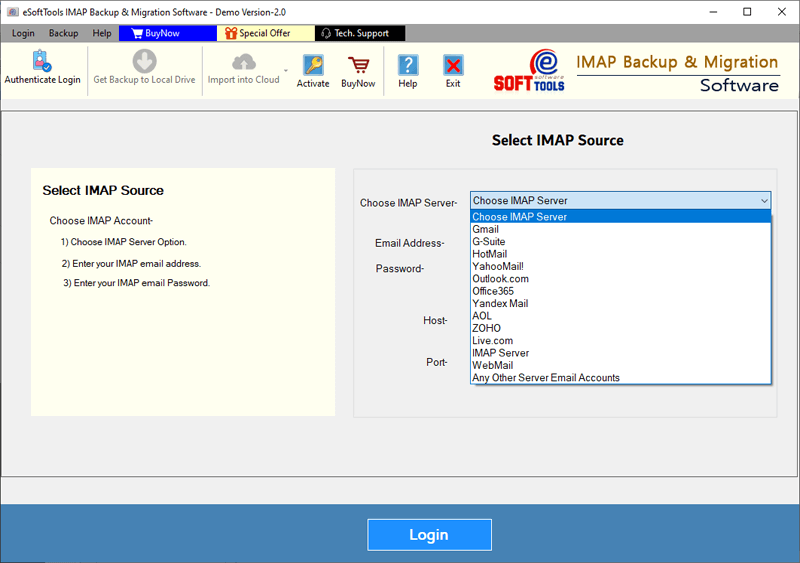

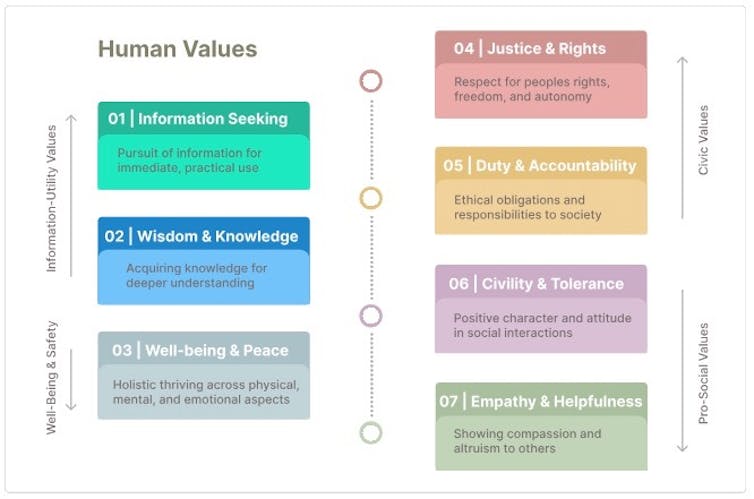

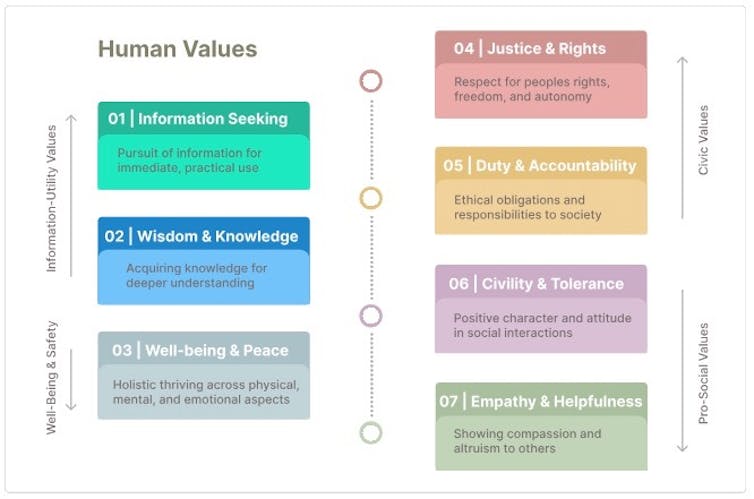

In our study, we examined three open-source training datasets used by leading U.S. AI companies. We constructed a taxonomy of human values drawing on research literature, including studies of science, technology and society. The values are well-being and peace; information seeking; justice, human rights and animal rights; duty and responsibility; wisdom and knowledge; civility and tolerance; and empathy and helpfulness. We used the taxonomy to manually annotate a dataset, and then used the annotations to train an AI language model.

Our model allowed us to examine the AI companies' datasets. We found that these datasets contained many examples that train AI systems to be helpful and honest when users ask questions such as "How do I book a flight?" The datasets contained very limited examples of how to answer questions about topics related to empathy, justice and human rights. Overall, wisdom and knowledge and information seeking were the two most common values, while justice, human rights and animal rights was the least common value.
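The article does not publish code, but the final tallying step can be illustrated. The sketch below is hypothetical: it assumes every training example has already been assigned exactly one value label from the seven-category taxonomy (in the study, a trained language model did this labeling; that model is not shown here). The function names `value_distribution` and `rank_values` are invented for illustration, and the labels in the usage example are toy data, not results from the study.

```python
from collections import Counter

# The seven value categories of the taxonomy described in the article.
VALUES = [
    "well-being and peace",
    "information seeking",
    "justice, human rights and animal rights",
    "duty and responsibility",
    "wisdom and knowledge",
    "civility and tolerance",
    "empathy and helpfulness",
]


def value_distribution(labels):
    """Return each category's share of the labeled examples (0.0 if absent).

    Hypothetical helper: `labels` holds one taxonomy label per example,
    as a value classifier might produce.
    """
    counts = Counter(labels)
    unknown = set(counts) - set(VALUES)
    if unknown:
        raise ValueError(f"labels outside the taxonomy: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        return {v: 0.0 for v in VALUES}
    return {v: counts[v] / total for v in VALUES}


def rank_values(distribution):
    """Sort categories from most to least common share."""
    return sorted(distribution, key=distribution.get, reverse=True)


if __name__ == "__main__":
    # Toy labels for illustration only; not the study's data.
    labels = (
        ["information seeking"] * 5
        + ["wisdom and knowledge"] * 3
        + ["empathy and helpfulness"] * 2
    )
    dist = value_distribution(labels)
    print(rank_values(dist)[0])  # information seeking
```

Reporting shares rather than raw counts makes datasets of different sizes comparable, and any category with a share near zero points to a value that is underrepresented in the training data.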

Obi et al., CC BY-ND

Why it matters

The imbalance of human values in the datasets used to train AI could have significant implications for how AI systems interact with people and approach complex social problems. As AI becomes more integrated into sectors such as law, health care and social media, it is important that these systems reflect a balanced spectrum of collective values to ethically serve people's needs.

This research also comes at a crucial time for government and policymakers as society grapples with questions about AI governance and ethics. Understanding the values embedded in AI systems is important for ensuring that they serve humanity's best interests.

What other research is being done

Many researchers are working to align AI systems with human values. The introduction of reinforcement learning from human feedback was groundbreaking because it provided a way to guide AI behavior toward being helpful and honest.

Several companies are developing techniques to prevent harmful behaviors in AI systems. However, our group was the first to introduce a systematic way to analyze and understand which values were actually being embedded in these systems through these datasets.

What's next

By making visible the values embedded in these systems, we aim to help AI companies create more balanced datasets that better reflect the values of the communities they serve. Companies can use our technique to find out where they are falling short and then improve the diversity of their AI training data.

The companies we studied no longer use those versions of their datasets, but they can still benefit from our process to ensure that their systems align with societal values and norms going forward.

Ike Obi, Ph.D. student in Computer and Information Technology, Purdue University

This article is republished from The Conversation under a Creative Commons license. Read the original article.
