A new AI coding challenge has revealed its first winner and set a new bar for AI-powered software engineers.

On Wednesday at 5 p.m. PT, the nonprofit Laude Institute announced the first winner of the K Prize, a multi-round AI coding challenge launched by Databricks and Perplexity co-founder Andy Konwinski. The winner was a Brazilian prompt engineer named Eduardo Rocha de Andrade, who will receive $50,000 for the prize. But more surprising than the win was his final score: he won with correct answers to just 7.5% of the questions on the test.

“We’re glad we built a benchmark that is actually hard,” Konwinski said. “Benchmarks should be hard if they’re going to matter,” he continued, adding: “Scores would be different if the big labs had entered with their biggest models. But that is the point.”

Konwinski has pledged $1 million to the first open source model that can score higher than 90% on the test.

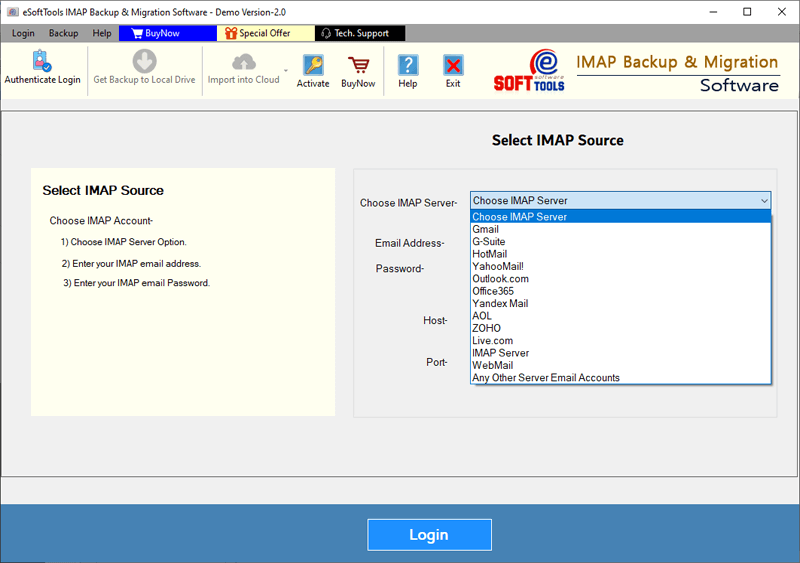

Similar to the well-known SWE-Bench system, the K Prize tests models against flagged issues from GitHub as a measure of how well models can deal with real-world programming problems. But while SWE-Bench is based on a fixed set of problems that models can train against, the K Prize is designed as a “contamination-free version of SWE-Bench,” using a timed entry system to guard against any benchmark-specific training. For round one, models had to be submitted by March 12. The K Prize organizers then built the test using only GitHub issues flagged after that date.

The top score of 7.5% stands in marked contrast to SWE-Bench itself, which currently shows a top score of 75% on its easier “Verified” test and 34% on its harder “Full” test. Konwinski isn’t yet sure whether the disparity is due to contamination in SWE-Bench or simply the challenge of collecting new issues from GitHub, but he expects the K Prize project to answer the question soon.

“As we get more runs of the thing, we’ll have a better sense,” he told TechCrunch, “because we expect people to adapt to the dynamics of competing on this every few months.”

It may seem like an odd place to fall short, given the wide range of AI coding tools already publicly available, but with benchmarks becoming too easy, many critics see projects like the K Prize as a necessary step toward solving AI’s growing evaluation problem.

“I’m quite optimistic about building new tests for existing benchmarks,” says Princeton researcher Sayash Kapoor, who put forward a similar idea in a recent paper. “Without such experiments, we can’t actually tell if the issue is contamination, or even just targeting the SWE-Bench leaderboard with a human in the loop.”

For Konwinski, it’s not just a better benchmark, but an open challenge to the rest of the industry. “If you listen to the hype, it’s like we should be seeing AI doctors and AI lawyers and AI software engineers, and that’s just not true,” he says. “If we can’t even get more than 10% on a contamination-free SWE-Bench, that’s the reality check for me.”
